Secure Data Lakes

Securely Process Sensitive Data (PII/PHI) in Data Lakes

Democratize your data effortlessly while ensuring data privacy, compliance, and security, all with the simplicity of an API.

- Trusted by Engineering Teams Building AI at Scale

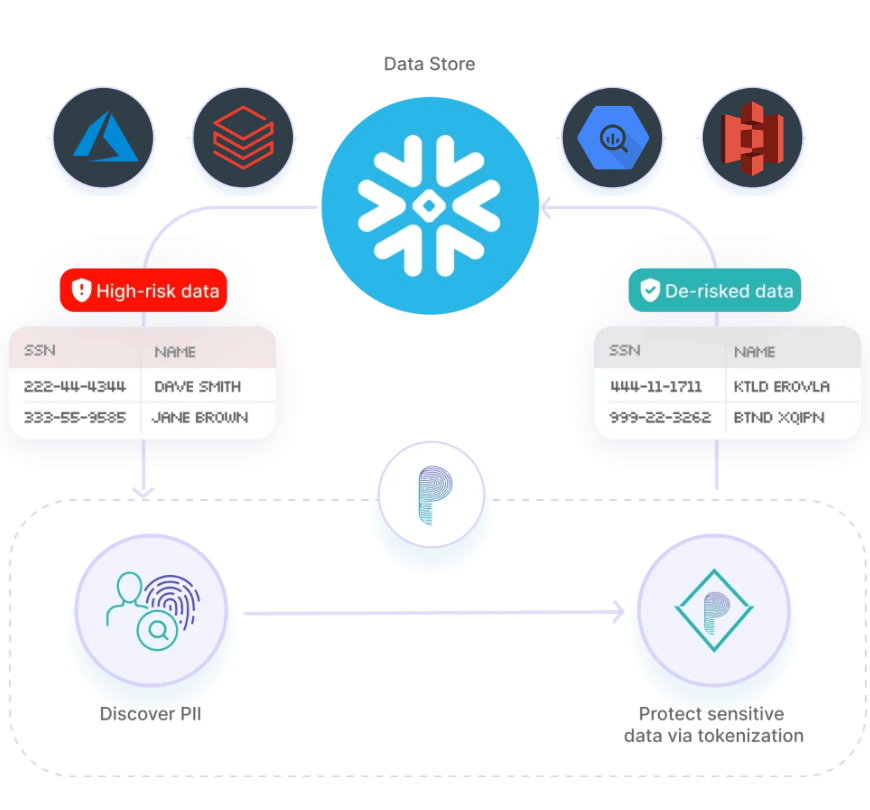

Data Masking (Tokenization) for Data Lakes

Integrate Protecto APIs into your ETL pipeline to identify and mask PII and other sensitive data. Securely use your data for analytics, AI training, and retrieval-augmented generation (RAG).
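To make the idea of a masking step inside an ETL pipeline concrete, here is a minimal sketch in plain Python. It does not use or represent the Protecto API: the function names (`make_token`, `mask_text`, `transform`), the key, and the two regex patterns are all assumptions chosen for illustration, and real PII detection needs far more than regular expressions.

```python
import hashlib
import hmac
import re

# Hypothetical secret for illustration; a real key would come from a key manager.
SECRET_KEY = b"demo-key-not-for-production"

# Deliberately simple patterns (assumptions); they catch only obvious cases.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def make_token(value: str, kind: str) -> str:
    """Derive a deterministic token, so the same input always maps to the same token."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<{kind}_{digest[:12]}>"


def mask_text(text: str) -> str:
    """Replace detected emails and SSN-like strings with tokens."""
    text = EMAIL_RE.sub(lambda m: make_token(m.group(0), "EMAIL"), text)
    text = SSN_RE.sub(lambda m: make_token(m.group(0), "SSN"), text)
    return text


def transform(rows):
    """ETL transform step: mask the free-text 'notes' field of each row."""
    for row in rows:
        yield {**row, "notes": mask_text(row["notes"])}
```

In a pipeline, `transform` would sit between extract and load, so that only masked text reaches the data lake.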

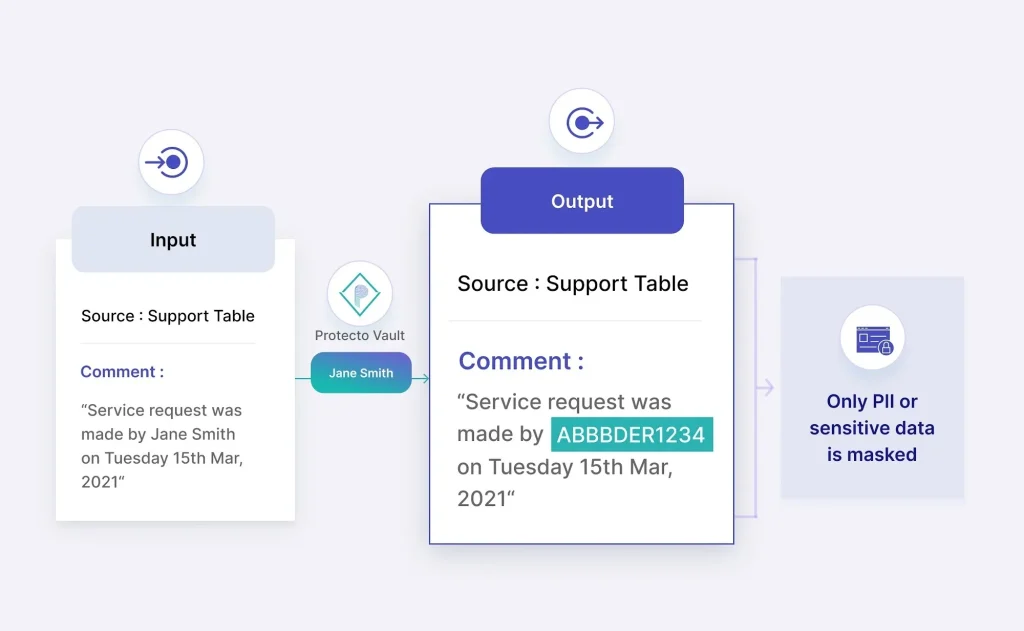

Scan Structured and Unstructured Data

Scan and mask sensitive data (PII/PHI) across structured or unstructured text. Leverage the masked data for analysis, sharing, and RAG while keeping sensitive data locked.

Maintain Data Utility

Unlike other masking tools that distort data, Protecto’s intelligent tokenization preserves data context and integrity. Enjoy accurate analysis and AI responses with consistent, format-preserving masking
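As a rough illustration of what "consistent, format-preserving" masking means, the sketch below replaces digits with digits and letters with letters while keeping length and punctuation. It is an assumption-laden toy, not Protecto's algorithm and not a standardized format-preserving encryption scheme; the key and the function name `format_preserving_mask` are hypothetical, and the mapping is one-way, so recovering the original would require a separate lookup store.

```python
import hashlib
import hmac

# Hypothetical secret for illustration only.
SECRET_KEY = b"demo-key-not-for-production"


def format_preserving_mask(value: str) -> str:
    """Mask a string while keeping its length, character classes, and separators."""
    # Keyed hash of the whole value: the same input always yields the same mask.
    stream = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).digest()
    out = []
    for i, ch in enumerate(value):
        b = stream[i % len(stream)]
        if ch.isdigit():
            out.append(str(b % 10))  # digit stays a digit
        elif ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr(base + b % 26))  # letter stays a letter, case kept
        else:
            out.append(ch)  # separators such as '-', '.', '@' pass through
    return "".join(out)
```

Because the output keeps the original shape, a masked phone number or ID still passes length and pattern validation in downstream schemas.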

Controlled Access to PII/PHI

Unmask data only when needed. Grant authorized users access to the original values while maintaining control and security.
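Controlled unmasking can be pictured as a lookup that checks the caller's role before returning the original value. The sketch below is a simplified in-memory model under stated assumptions: the `TokenVault` class, its methods, and the role names are hypothetical and do not describe Protecto's vault or API.

```python
import secrets


class TokenVault:
    """Toy in-memory vault: maps values to random tokens and gates unmasking by role."""

    def __init__(self, authorized_roles):
        self._authorized_roles = set(authorized_roles)
        self._token_to_value = {}
        self._value_to_token = {}

    def mask(self, value: str) -> str:
        """Return the existing token for a value, or mint a new random one."""
        if value not in self._value_to_token:
            token = f"tok_{secrets.token_hex(8)}"
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def unmask(self, token: str, role: str) -> str:
        """Return the original value only for roles on the allow list."""
        if role not in self._authorized_roles:
            raise PermissionError(f"role {role!r} may not unmask data")
        return self._token_to_value[token]


vault = TokenVault(authorized_roles={"privacy_officer"})
token = vault.mask("jane@example.com")
original = vault.unmask(token, role="privacy_officer")  # allowed
# vault.unmask(token, role="analyst") would raise PermissionError
```

Analysts work with `token` everywhere; only a caller holding an authorized role can turn it back into the original value.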

Want to learn how to identify PII in your data lake and protect it?

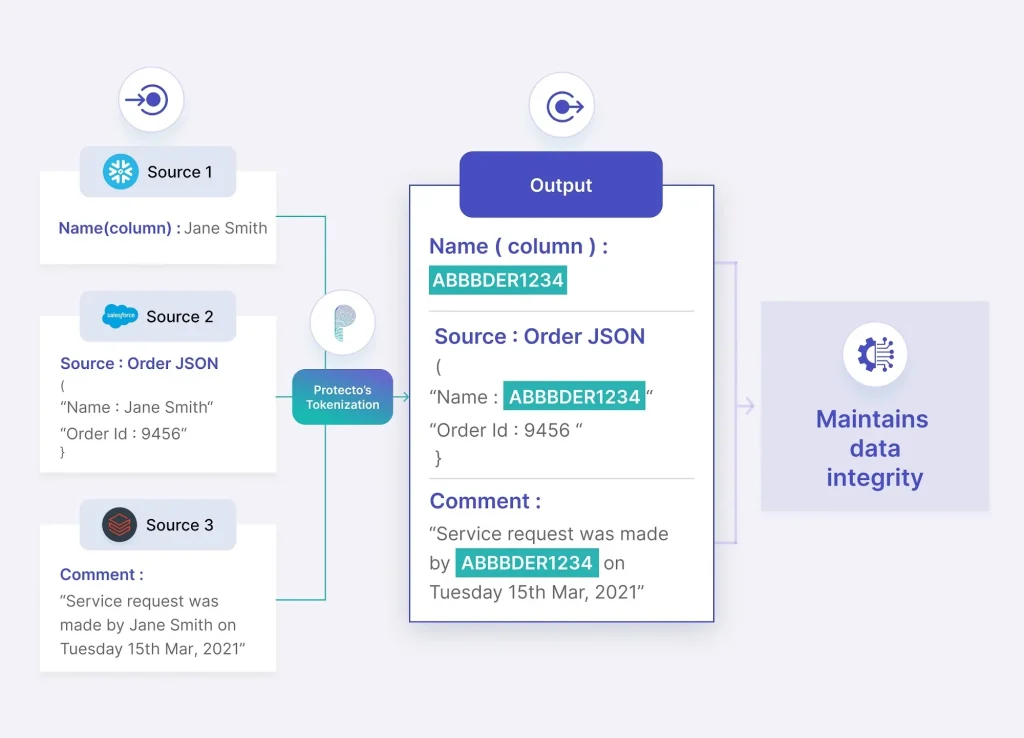

Protect Your Sensitive PII Across Systems

Protecto consistently masks sensitive data across all your sources, so you can easily combine and analyze data without losing valuable insights
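The value of consistent masking across sources is that tokens can still serve as join keys. The sketch below shows this with two small, made-up datasets: the key, the `tokenize` helper, and the sample rows are illustrative assumptions, not Protecto's implementation.

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical shared secret; every source must use the same key to get matching tokens.
SECRET_KEY = b"demo-key-not-for-production"


def tokenize(value: str) -> str:
    """Deterministic token: normalize first so 'Jane@X.com' and 'jane@x.com' match."""
    normalized = value.strip().lower()
    digest = hmac.new(SECRET_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_column(rows, column):
    """Return copies of the rows with one column replaced by its token."""
    return [{**row, column: tokenize(row[column])} for row in rows]


def revenue_by_plan(crm_rows, billing_rows):
    """Join the two masked sources on the tokenized email and sum amounts per plan."""
    plan_by_token = {row["email"]: row["plan"] for row in crm_rows}
    totals = defaultdict(int)
    for row in billing_rows:
        plan = plan_by_token.get(row["email"])
        if plan is not None:
            totals[plan] += row["amount"]
    return dict(totals)


# Made-up sample data from two separate systems.
crm = mask_column(
    [{"email": "Jane@Example.com", "plan": "pro"},
     {"email": "raj@example.com", "plan": "free"}],
    "email",
)
billing = mask_column(
    [{"email": "jane@example.com", "amount": 40},
     {"email": "jane@example.com", "amount": 60},
     {"email": "raj@example.com", "amount": 5}],
    "email",
)
totals = revenue_by_plan(crm, billing)
```

The join runs entirely on tokens, so the analysis never touches a raw email address.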

Enhanced Data Privacy & Security

Replace sensitive PII/PHI data with masked tokens to safely use it for analytics, AI development, sharing, and reporting, minimizing privacy and security risks

Easy Data Lake Integrations

Protecto APIs and connectors support popular storage platforms such as Snowflake, Databricks, S3, Azure Data Fabric, BigQuery, and more.

Improved Privacy and Compliance

Meet the requirements of privacy regulations (HIPAA, GDPR, DPDP, CPRA, etc.) by masking PII and tightly managing sensitive personal data.

Data Protection Across Systems

Confidently share data across systems without privacy concerns or inconsistencies. Simplify data exchange, synchronization, and integration by consistently tokenizing sensitive data.

Safe Data for Testing and Development

Mask PII and other sensitive data in production data when creating test data, enabling safer development and testing.

Adopt Gen AI Without PII Risks

Use your data for AI and with Large Language Models (LLMs) without exposing PII/PHI, while maintaining AI accuracy.

Sign up for a demo

Why Protecto?

Protecto is the only data masking tool that identifies and masks sensitive data while preserving its consistency, format, and type. Our easy-to-integrate APIs ensure safe analytics, statistical analysis, and RAG without exposing PII/PHI

Easy to Integrate APIs

Our turnkey APIs are designed for seamless integration with your existing systems and infrastructure, enabling you to go live in minutes.

Data Protection at Scale

Deliver data tokenization through real-time and asynchronous APIs to accommodate high data volumes without compromising performance.

Pay as You Go

Scale effortlessly and protect more data sources with our flexible, simplified pricing model

On-Premises or SaaS

Deploy Protecto on your servers or consume it as SaaS. Either way, get the full benefits, including multitenancy.

Secure Privacy Vault

Lock your sensitive PII in a zero-trust data privacy vault that provides a robust way to store and manage it securely.

Want to try Protecto in a sandbox?

Frequently Asked Questions

To enable various business objectives, such as analyzing marketing metrics and reporting, an organization might need to aggregate and analyze sensitive data from various sources. By adopting tokenization, an organization can reduce the instances where sensitive data is accessed and instead show tokens to users that are not authorized to view sensitive data. This approach allows multiple applications and processes to interact with tokenized data while ensuring the security of the sensitive information remains intact.

No, tokenization is a widely recognized and accepted method of pseudonymization. It is an advanced technique for safeguarding individuals’ identities while preserving the functionality of the original data. Cloud-based tokenization providers offer organizations the ability to completely eliminate identifying data from their environments, thereby reducing the scope and cost of compliance measures.

Tokenization is commonly used as a security measure to protect sensitive data while still allowing certain operations to be performed on the data without exposing the actual sensitive information. Many types of data can be tokenized, including credit card data, Personally Identifiable Information (PII), transaction data, Personal Information (PI), and health records.

Real-time token generation takes less than a second. The tokenization method is efficient enough to handle large volumes of text in real-time applications without causing significant delays or bottlenecks.